ChatGPT, Bing Search, Bard & Friends – Part II

Jantje Silomon

15 May 2023

Peeking under the Hood

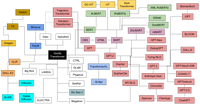

As mentioned in the last post, the ‘GPT’ part in ChatGPT stands for “Generative Pre-trained Transformer” – just one example of a transformer model in a family of many dozens. Two graphics by Xavier Amatriain illustrate the model boom and interrelationship nicely:

Xavier Amatriain: Five years of models

Xavier Amatriain: A Transformer Family Tree

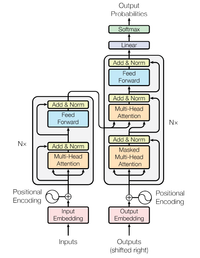

The architecture is what defines these types of deep learning models, with an encoder-decoder principle leading to THE (in capitals) Transformer in 2017 (a blogpost on that here).

The encoder part takes the input, does some magic changing it to a fixed-length vector and passes it to the decoder. This then munches on the vector and turns it into the output. The magic as I called it has many different layers based on the transformer in question, for example, THE Transformer had six layers for each, the encoder and decoder. These had various sublayers to include multi-head attention and feed forward, and… well, a picture might help (or might not!), taken from the original paper linked previously:

The info is represented by pairs of vectors, called key-value pairs, while the question is also represented by a vector and called a query. The output is based on a combination of the values, where each value is multiplied by a number based on how well it matches the query. While older models had several different mechanisms to do their transformer magic between input and output, THE Transformer suggested you focus on ‘attention’. Attention in this context can be thought of as finding the most relevant parts of some information for a given query.

I figured as I’m writing about the attention mechanism, I’d ask Bing what it uses (vs ChatGPT), here is its response (7th May, creative mode):

“Bing search uses a sparse attention mechanism that reduces the computation time and memory requirements by computing only a limited selection of similarity scores from a sequence rather than all possible pairs. This results in a sparse matrix rather than a full matrix. The sparse entries are determined by a predefined pattern or a learned rule that specifies which positions should attend to each other. For example, Bing search may use local attention, where each position only attends to nearby positions, or fixed attention, where each position only attends to a fixed number of positions.

ChatGPT uses a dense attention mechanism that computes all similarity scores for all pairs of positions in an input sequence. This results in a full matrix rather than a sparse matrix. The dense attention mechanism enables ChatGPT to learn patterns in language and generate coherent and human-like text responses.”

In balanced mode, it claimed to have no info on attention mechanisms, same in precise mode. When I asked the same question a few minutes later, I got a different response. I also used that opportunity to check again if it knew it was based on a GPT language model… and well. Briefly, it did… then shortly afterwards it was an iteration of BERT, followed by that it was something completely different. After I fed it the link from the Microsoft blog announcing GPT-4 running it, it told me it was likely a fake site as the date was in the future… and after I gave it 7th May 2023 as a date, it again insisted March 2023 was in the future and that I might be getting calendars confused… ahem.

Research since THE Transformer has shown that it is not always best to have an encoder-decoder pair, sometimes only one of them outperforms a tandem. For example, pure decode pretraining appears to be the best for text generation, while encoder-decoder pretraining still wins when it comes to translating or summarising.

Although initially developed for language translation, pretrained transformers are highly adaptable, as the myriad of applications have shown – in 2021, GPT-3 alone powered over 300 different ones! While the pre-training part is (computationally) expensive, fine-tuning it on a smaller labelled corpus is cheaper, allowing relatively quick task-specific adaption.

If you want to delve in more deeply, head over to Jay Alammar’s blog, particularly his write-ups on neural machine translation models or the transformer video! Or if you have more time, a video of building GPT from scratch (sort of) in under 2h! Alternatively, Sebastian Rashka’s site’s a good place to start re all things LLMs, including a whole lecture series on Deep Learning with Part 5 focusing on generative models.